At the recent QCon San Francisco, Adi Polak, Director of Advocacy and Developer Experience Engineering at Confluent, delivered an insightful presentation titled "Stream All the Things: Patterns of Effective Data Stream Processing." Her talk centred on the challenges organisations still face with data streaming, and on pragmatic solutions for building and managing scalable, efficient streaming pipelines.

Despite significant technological advances over the past decade, data streaming remains a substantial hurdle for many businesses. Teams reportedly spend as much as 80% of their time dealing with issues such as errors in downstream outputs and poor pipeline performance. Polak outlined the core expectations of a good data streaming solution: reliability, compatibility with diverse systems, low latency, scalability, and high-quality data.

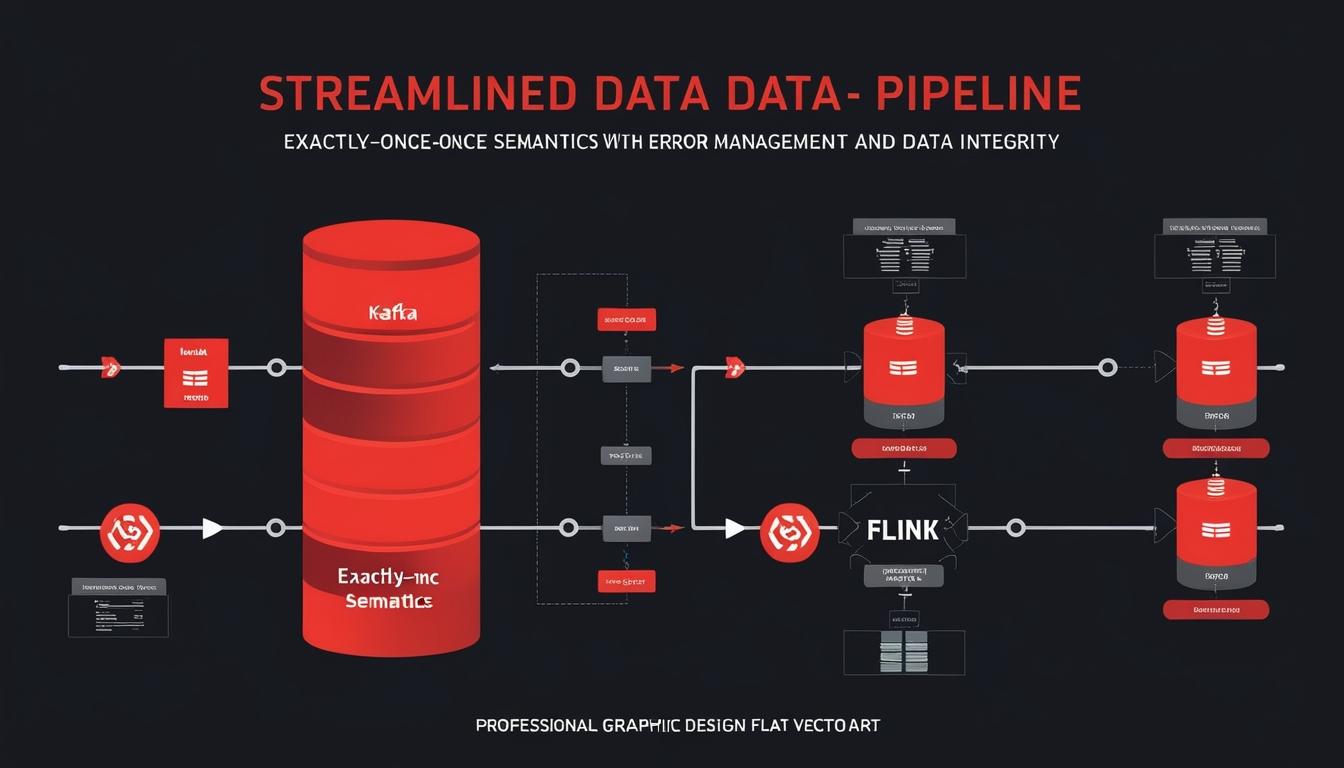

To meet these expectations, organisations must address challenges around throughput, real-time processing, data integrity, and error handling. The session explored advanced concepts such as exactly-once semantics and join operations, as well as the need to maintain data integrity as companies adapt their infrastructure to support AI-driven applications.

Polak presented several design patterns for managing the complexity of data streaming pipelines, among them Dead Letter Queues (DLQs) for handling errors and techniques for guaranteeing exactly-once processing across different systems.
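The DLQ pattern can be sketched in a few lines. In this minimal Python sketch, plain lists stand in for the output and dead-letter topics that a Kafka-based pipeline would use, and the `Pipeline`, `DeadLetter` and `parse_order` names are hypothetical. A record that still fails after a bounded number of attempts is parked together with its error type and message instead of stopping the stream, so it can be inspected and replayed later.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class DeadLetter:
    """A failed record plus the metadata needed to triage and replay it later."""
    payload: Any
    error_type: str
    error_message: str
    attempts: int


@dataclass
class Pipeline:
    """Hypothetical processor: lists stand in for the output topic and the DLQ topic."""
    handler: Callable[[Any], Any]
    max_attempts: int = 3
    output: list = field(default_factory=list)
    dead_letters: list = field(default_factory=list)

    def process(self, record: Any) -> None:
        last_error: Optional[Exception] = None
        for _ in range(self.max_attempts):
            try:
                self.output.append(self.handler(record))
                return
            except Exception as exc:  # treat any handler failure as a processing error
                last_error = exc
        # Retries exhausted: park the record with its error context instead of halting the stream.
        self.dead_letters.append(DeadLetter(
            payload=record,
            error_type=type(last_error).__name__,
            error_message=str(last_error),
            attempts=self.max_attempts,
        ))


def parse_order(raw: str) -> dict:
    """Hypothetical handler: decode a JSON order and reject negative amounts."""
    order = json.loads(raw)
    if order["amount"] < 0:
        raise ValueError("negative amount")
    return order


pipeline = Pipeline(handler=parse_order)
for raw in ['{"id": 1, "amount": 10}', "not json", '{"id": 3, "amount": -5}']:
    pipeline.process(raw)

print(len(pipeline.output), [d.error_type for d in pipeline.dead_letters])
# prints: 1 ['JSONDecodeError', 'ValueError']
```

Retrying only helps with transient faults; malformed input such as `not json` fails on every attempt, which is exactly the kind of record a DLQ is meant to absorb without blocking the records behind it.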

One cornerstone of reliable data processing that Polak discussed was exactly-once semantics. She contrasted legacy Lambda architectures with modern Kappa architectures, which handle real-time events, state, and time more deterministically. Exactly-once guarantees can be implemented with a two-phase commit protocol across systems such as Apache Kafka and Apache Flink: operators first pre-commit their work, and a system-wide commit follows, so output stays consistent even when individual components fail. She also emphasised window-based time calculations, such as tumbling, sliding, and session windows, as a further way to make processing deterministic.
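The two-phase commit flow can be illustrated with a toy coordinator. This is a minimal in-memory Python sketch, not the actual API of Flink or Kafka: `TransactionalSink`, `run_checkpoint` and the `fail_pre_commit` flag are hypothetical, and a real implementation would persist staged transactions durably and retry commits after recovery. Each sink pre-commits its pending writes when a checkpoint is taken, and the coordinator publishes them only if every sink votes yes; otherwise everything staged for that checkpoint is discarded, so no partial output becomes visible.

```python
class TransactionalSink:
    """Hypothetical participant that stages writes and exposes them only after commit."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.fail_pre_commit = False       # test hook to simulate a failing participant
        self.pending: list = []            # writes since the last checkpoint
        self.staged: dict = {}             # checkpoint id -> pre-committed writes
        self.committed: list = []          # what downstream readers can see

    def write(self, record: str) -> None:
        self.pending.append(record)

    def pre_commit(self, checkpoint_id: int) -> bool:
        """Phase 1: move pending writes into a stage that is not yet visible."""
        if self.fail_pre_commit:
            return False
        self.staged[checkpoint_id] = self.pending
        self.pending = []
        return True

    def commit(self, checkpoint_id: int) -> None:
        """Phase 2: publish the staged writes."""
        self.committed.extend(self.staged.pop(checkpoint_id, []))

    def abort(self, checkpoint_id: int) -> None:
        """Drop staged and pending writes; a real source would replay from the last checkpoint."""
        self.staged.pop(checkpoint_id, None)
        self.pending = []


def run_checkpoint(sinks: list, checkpoint_id: int) -> bool:
    """Coordinator: commit everywhere only if every participant pre-commits."""
    votes = [sink.pre_commit(checkpoint_id) for sink in sinks]
    if all(votes):
        for sink in sinks:
            sink.commit(checkpoint_id)
        return True
    for sink in sinks:
        sink.abort(checkpoint_id)
    return False


orders, payments = TransactionalSink("orders"), TransactionalSink("payments")
orders.write("order-1")
payments.write("payment-1")
first = run_checkpoint([orders, payments], checkpoint_id=1)

orders.write("order-2")
payments.write("payment-2")
payments.fail_pre_commit = True
second = run_checkpoint([orders, payments], checkpoint_id=2)

print(first, second, orders.committed, payments.committed)
# prints: True False ['order-1'] ['payment-1']
```

The second checkpoint shows the key property: `order-2` was successfully pre-committed, yet it never reaches `orders.committed`, because `payments` failed its pre-commit and the coordinator aborted both participants.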

Polak also addressed joins, another challenging aspect of data streaming, whether they combine a stream with a batch source or two real-time streams. She stressed that joins require careful planning to integrate data correctly while preserving exactly-once semantics.
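A stream-to-stream join over tumbling windows shows why joins need planning: each side must buffer state until a matching event can no longer arrive. The Python sketch below is a simplified illustration under stated assumptions, not how any particular engine implements joins. It treats the highest event time seen so far as the watermark with no allowed lateness, and `Event`, `window_start` and `tumbling_window_join` are hypothetical names. Events are matched on key within the same tumbling window, state for closed windows is evicted to keep memory bounded, and events that arrive after their window has closed are returned separately.

```python
from collections import defaultdict
from typing import NamedTuple


class Event(NamedTuple):
    key: str
    timestamp: int  # event time, in seconds
    value: str


def window_start(ts: int, size: int) -> int:
    """Start of the tumbling window of length `size` that contains `ts`."""
    return ts - ts % size


def tumbling_window_join(events, size: int):
    """Incrementally inner-join two streams on key within tumbling windows.

    `events` yields (side, Event) pairs in arrival order, where side is "left" or "right".
    Returns (joined rows, late events that arrived after their window closed).
    """
    state = {"left": defaultdict(list), "right": defaultdict(list)}
    joined, late = [], []
    # Assumption: watermark = highest event time seen so far, with no allowed lateness.
    watermark = float("-inf")
    for side, event in events:
        start = window_start(event.timestamp, size)
        if start + size <= watermark:
            late.append(event)  # its window is closed and its state already evicted
            continue
        other = "right" if side == "left" else "left"
        bucket = (event.key, start)
        # Probe the other side's buffered events for the same key and window.
        for match in state[other].get(bucket, ()):
            left, right = (event, match) if side == "left" else (match, event)
            joined.append((event.key, start, left.value, right.value))
        state[side][bucket].append(event)
        # Advance the watermark and evict windows that can no longer receive matches.
        watermark = max(watermark, event.timestamp)
        for buffer in state.values():
            for closed in [b for b in buffer if b[1] + size <= watermark]:
                del buffer[closed]
    return joined, late


stream = [
    ("left", Event("user-1", 5, "click")),
    ("right", Event("user-1", 20, "purchase")),
    ("right", Event("user-2", 30, "purchase")),
    ("left", Event("user-1", 65, "click")),
    ("right", Event("user-1", 50, "refund")),
]
rows, late = tumbling_window_join(stream, size=60)
print(rows)  # prints: [('user-1', 0, 'click', 'purchase')]
print(late)  # prints: [Event(key='user-1', timestamp=50, value='refund')]
```

The late `refund` event is the kind of record a production pipeline needs an explicit policy for, such as allowed lateness or routing to a DLQ. In a stream-batch join, by contrast, the batch side can be loaded as a keyed lookup table rather than held as windowed state.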

Data integrity emerged as a fundamental principle for building trustworthy pipelines. Polak introduced the notion of "guarding the gates", highlighting the importance of schema validation, versioning, and serialisation managed through a schema registry. These mechanisms help preserve physical, logical, and referential integrity and reduce the risk of errors degrading data quality. Polak also showcased pluggable failure enrichers, such as automated error-processing tools integrated with platforms like Jira, which label errors so that they can be resolved systematically.
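Guarding the gates can be illustrated with a toy registry. In this minimal Python sketch, `SchemaRegistry`, `Field` and `validate` are hypothetical stand-ins, not the API of any real schema registry, and the compatibility rule is a simplified assumption: a new version may add fields only if they have defaults and may not change an existing field's type, so consumers on the new schema can still read data written with the old one. Records are conformed to the latest schema at the gate, and anything that does not fit is rejected before it reaches downstream consumers.

```python
from dataclasses import dataclass
from typing import Any

_MISSING = object()  # sentinel: the field has no default, so it is required


@dataclass(frozen=True)
class Field:
    name: str
    kind: type
    default: Any = _MISSING


class SchemaRegistry:
    """Hypothetical in-memory registry mapping a subject to its schema versions."""

    def __init__(self) -> None:
        self.subjects: dict = {}

    def register(self, subject: str, schema: tuple) -> int:
        versions = self.subjects.setdefault(subject, [])
        if versions:
            previous = {f.name: f.kind for f in versions[-1]}
            # Simplified compatibility check against the latest registered version.
            for f in schema:
                if f.name in previous and previous[f.name] is not f.kind:
                    raise ValueError(f"field '{f.name}' changed type")
                if f.name not in previous and f.default is _MISSING:
                    raise ValueError(f"field '{f.name}' added without a default")
        versions.append(schema)
        return len(versions)  # 1-based version number


def validate(record: dict, schema: tuple) -> dict:
    """Return the record conformed to `schema`, or raise ValueError at the gate."""
    out = {}
    for f in schema:
        if f.name in record:
            value = record[f.name]
        elif f.default is not _MISSING:
            value = f.default
        else:
            raise ValueError(f"missing required field '{f.name}'")
        if not isinstance(value, f.kind):
            raise ValueError(f"field '{f.name}' expected {f.kind.__name__}")
        out[f.name] = value
    return out


registry = SchemaRegistry()
v1 = (Field("id", int), Field("amount", float))
v2 = v1 + (Field("currency", str, "USD"),)
registry.register("payments", v1)
registry.register("payments", v2)

rejection = None
try:
    registry.register("payments", v2 + (Field("region", str),))
except ValueError as exc:
    rejection = str(exc)

gate_error = None
latest = registry.subjects["payments"][-1]
try:
    validate({"id": "7", "amount": 9.5}, latest)
except ValueError as exc:
    gate_error = str(exc)

print(rejection)
print(gate_error)
print(validate({"id": 7, "amount": 9.5}, latest))
# prints:
# field 'region' added without a default
# field 'id' expected int
# {'id': 7, 'amount': 9.5, 'currency': 'USD'}
```

Records rejected at the gate like this are natural candidates for the error labelling and DLQ handling discussed in the talk, rather than being silently dropped.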

Polak closed by examining the growing intersection of data streaming and AI-driven applications. She noted that the success of AI systems for fraud detection, dynamic personalisation, and real-time optimisation depends on robust, real-time data infrastructure, and stressed the need to design pipelines that can meet the high-throughput, low-latency demands of these applications.

Polak summarised her key takeaways for effective data streaming: prioritise data quality and use DLQs for error management, ensure exactly-once guarantees through resilient architectures, plan join operations carefully, and lay the groundwork for healthy error handling through clear labelling and systematic resolution.

Source: Noah Wire Services