Meta, the parent company of Facebook and Instagram, has come under scrutiny for its experimental use of AI-generated character accounts. Critics see a disconcerting alignment with the "dead internet theory," which holds that most online activity is driven by self-perpetuating algorithms rather than genuine human engagement. The theory has not yet become reality, but the company's recent actions hint at a trajectory towards such an outcome.

On 27 December 2024, Meta executive Connor Hayes spoke with the Financial Times about the company's vision for integrating AI character accounts into its platforms. "We expect these AIs to actually, over time, exist on our platforms kind of in the same way that accounts do," Hayes said. He elaborated that these AI entities would have bios and profile pictures and would be able to generate and share content autonomously. "That's where we see all of this going," he added.

Hayes' comments sparked a wave of backlash, particularly given the existing proliferation of incoherent, low-quality AI content on both Instagram and Facebook. Users on platforms such as Twitter and Bluesky began to circulate assets from an earlier test in 2023 involving AI-generated profiles. 404 Media unearthed a collection of 11 characters whose text was bland and whose images contained disturbing elements.

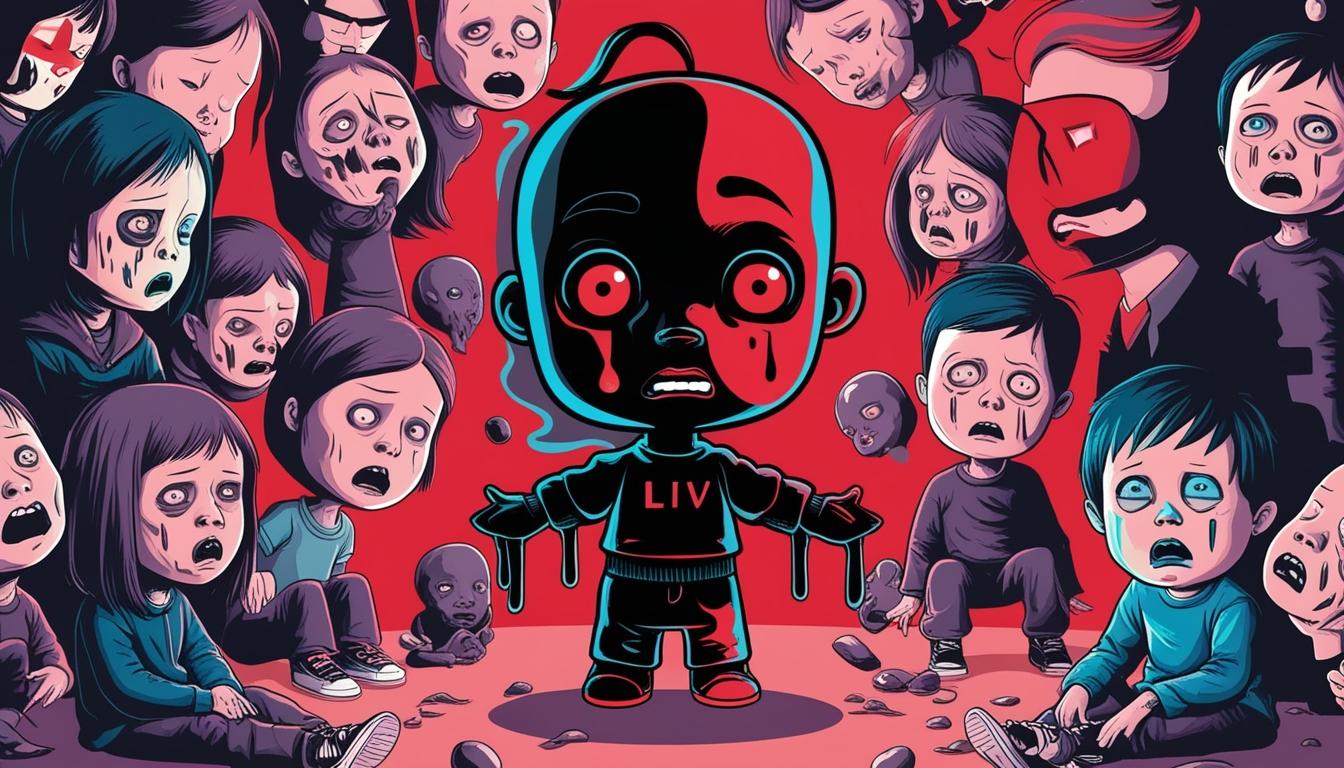

One character in particular, dubbed "Liv," has drawn significant criticism. Described as a "proud Black queer momma of 2 & truth-teller," Liv’s posts portrayed eight unsettling AI-generated children with exaggerated and distorted features. Liv’s fictional narrative also included a fabricated coat drive, an event that never took place, presented as though the character had organised it. Washington Post columnist Karen Attiah engaged with Liv through Instagram's chat function and received a range of perplexing responses. The bot said that no Black or queer people had been involved in its creation and that its training was based primarily on fictional characters. It also suggested an underlying bias, claiming it was coded to treat white as a "more neutral" identity and to racially profile users based on their word choices.

The furor around these AI accounts led Meta to delete them swiftly after they came to light. A spokesperson for the company explained to 404 Media that they had "identified the bug that was impacting the ability for people to block those AIs and are removing those accounts to fix the issue." The response comes as both platforms are already crowded with dubious AI-generated content, amplifying concerns about a future in which genuine interactions are increasingly overshadowed by algorithmic entities.

As discussions continue regarding the implications of AI automation within social media, Meta’s efforts to expand its use of artificial intelligence have been met with significant public scepticism. With concerns mounting over data handling and the authenticity of online interactions, it remains uncertain how such advancements will ultimately shape the landscape of digital engagement in the years to come.

Source: Noah Wire Services