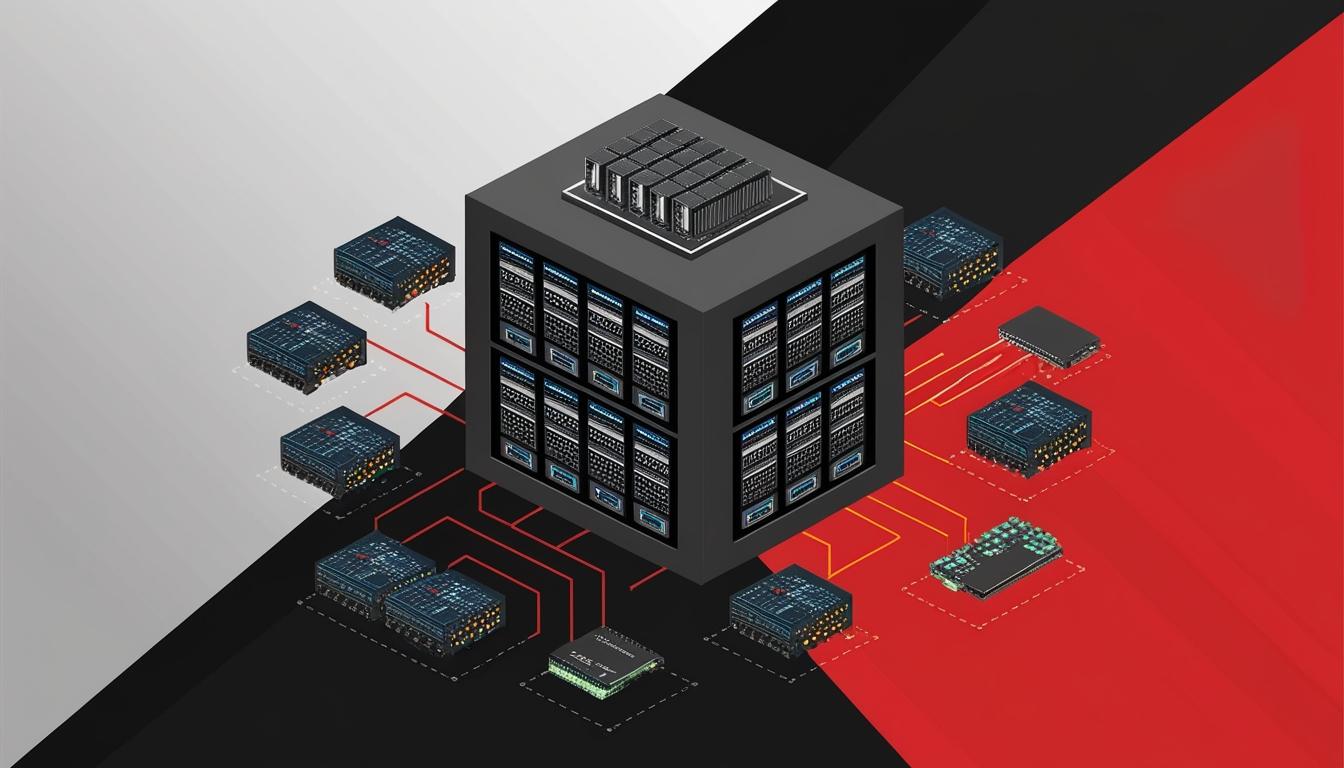

A recent survey indicates that only 21 of the enterprises surveyed have ventured into self-hosting AI, and a notable trend is emerging in their network infrastructure requirements. Those currently self-hosting, or evaluating whether to, agree that deploying AI requires a specialised cluster of GPU-equipped computers. This dedicated cluster must be well connected to the primary storage systems that hold essential business data, which, survey participants noted, presents a new set of networking challenges.

According to Network World, enterprises that have implemented AI report heavy demand for bandwidth to support “horizontal” (server-to-server, or east-west) traffic, well beyond what conventional applications require. Many organisations are therefore considering upgrades to their data centres. Ten of the surveyed enterprises said their AI server clusters would need faster Ethernet connectivity and higher-capacity switches. Respondents acknowledged that a functional on-premises AI deployment requires new network devices, and fifteen firms confirmed buying new switches, even for large-scale trials.

Current users of self-hosted AI also flagged a key challenge: a tendency to overestimate how large their AI clusters need to be. Running large language models (LLMs), for instance, was said to require hundreds of GPUs across multiple servers. By contrast, the one-third of self-hosting enterprises that took a more cautious approach recommend starting with smaller language models and adding resources incrementally as experience is gained and need is established. This approach aims to ensure that only genuinely valuable AI applications are developed, preventing the cluster from becoming bloated. As one anonymous user put it, “Applications otherwise build up, exceed, and then increase, the size of the AI cluster.”

Self-hosting enterprises universally recognised the importance of separating AI horizontal traffic from their primary data centre network, so that congestion does not spill over onto other applications. One enterprise reported that its AI cluster could generate horizontal traffic equivalent to that of its entire data centre, albeit in brief bursts lasting less than a minute. Users stressed that latency during these bursts could severely reduce the value of the applications, both delaying results and prolonging the traffic spikes themselves. Trials also revealed that organisations were largely unprepared for AI's specific network requirements until they had tested them in practice.

Further complicating AI deployment is the relationship between the AI cluster and the enterprise's core data repositories, which largely determines how much the cluster affects wider data centre operations. Data movement, dictated by both the type of application and how it is implemented, plays a central role in efficiency.

AI and machine learning applications with a limited scope, particularly those focused on IT or network operations and security, typically process real-time data. This is mostly low-volume telemetry and has minimal impact on overall data centre performance. Generative AI applications aimed at business analytics, on the other hand, need broad access to core business data, although they often rely more on historical summaries than on detailed transactional records. This gives organisations an opportunity to conserve resources by keeping condensed versions of the source data within the AI cluster itself.
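To illustrate what "condensed source data" might mean in practice, here is a minimal sketch that rolls individual transactions up into one row per day and region, so that only the summary would need to live in the AI cluster. The `Transaction` record layout, the `summarise` function and the sample values are all hypothetical assumptions for illustration; they are not drawn from the survey or any particular product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    # Hypothetical record layout; real business data will differ.
    date: str      # ISO date, e.g. "2024-05-01"
    region: str
    amount: float


def summarise(transactions):
    """Condense transactions into one (count, total) row per (date, region)."""
    totals = defaultdict(lambda: [0, 0.0])
    for t in transactions:
        row = totals[(t.date, t.region)]
        row[0] += 1          # number of transactions in this group
        row[1] += t.amount   # summed value of this group
    # Sort by key so the summary order is deterministic.
    return [
        {"date": d, "region": r, "count": c, "total": round(s, 2)}
        for (d, r), (c, s) in sorted(totals.items())
    ]


if __name__ == "__main__":
    sample = [
        Transaction("2024-05-01", "north", 120.0),
        Transaction("2024-05-01", "north", 80.5),
        Transaction("2024-05-01", "south", 42.0),
        Transaction("2024-05-02", "north", 10.0),
    ]
    summary = summarise(sample)
    print(f"{len(sample)} transactions -> {len(summary)} summary rows")
    for row in summary:
        print(row)
```

How much data this saves depends entirely on how many transactions share each summary key; with millions of daily records and a handful of regions, the summary is orders of magnitude smaller than the raw data it replaces.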

As AI adoption continues to evolve, these insights highlight essential considerations for enterprises planning to implement self-hosted AI. Network architecture, bandwidth requirements, and data placement strategies will play a critical role in shaping AI applications within business environments.

Source: Noah Wire Services